- Trolley problem scenarios, a staple of ethical discussions, are designed to explore the intricacies of moral decision-making.

- These thought experiments raise fundamental questions about our moral intuitions, values, and the principles that guide our ethical judgments.

- Empathy and compassion: People’s decisions in trolley problems frequently demonstrate a deep-seated concern for minimizing harm and suffering, driven by empathetic responses.

- Fear and disgust: Emotional reactions such as fear or disgust may influence moral judgments, especially in scenarios involving direct physical harm or close personal involvement.

- Utilitarian calculations: The scenarios often bring to light utilitarian principles, where the moral action is considered the one that maximizes overall happiness or minimizes suffering.

- Conflict of values: These scenarios often present situations where important values conflict, such as the value of saving lives versus the value of not actively causing harm.

Table of Contents:

- What do trolley problem scenarios reveal? Emotion-emergent values? Objective moral facts? Something else?

- Does the complexity of the trolley problem strongly suggest there is no objective moral standard readily accessible to humans and that “moral intuitions” are actually emotional dispositions?

- Can AI agents now or in the future contribute to meaningful insights into trolley problem “solutions”? Why or why not?

- Can you produce an example of a computational ethics calculus related to the trolley problem?

- Quizzes

- Provide 15 discussion questions relevant to the content above.

What do trolley problem scenarios reveal? Emotion-emergent values? Objective moral facts? Something else?

What Do Trolley Problem Scenarios Reveal?

Trolley problem scenarios, a staple of ethical discussions, are designed to explore the intricacies of moral decision-making. These thought experiments raise fundamental questions about our moral intuitions, values, and the principles that guide our ethical judgments.

1. Emotion-Emergent Values

Trolley problem scenarios often highlight the role of emotion-emergent values in ethical decision-making. When individuals are faced with these dilemmas, their emotional responses can reveal underlying values and priorities:

- Empathy and Compassion: People’s decisions in trolley problems frequently demonstrate a deep-seated concern for minimizing harm and suffering, driven by empathetic responses.

- Fear and Disgust: Emotional reactions such as fear or disgust may influence moral judgments, especially in scenarios involving direct physical harm or close personal involvement.

- Moral Intuitions: These scenarios can expose intuitive judgments that might not be easily articulated but strongly influence decision-making processes.

2. Objective Moral Facts

While trolley problem scenarios primarily illuminate personal and societal values, they also prompt discussions about the existence and nature of objective moral facts:

- Utilitarian Calculations: The scenarios often bring to light utilitarian principles, where the moral action is considered the one that maximizes overall happiness or minimizes suffering.

- Deontological Principles: Conversely, these problems also highlight deontological ethics, where the morality of an action is judged based on adherence to rules or duties, regardless of the outcomes.

- Moral Universality: Debates arise over whether there are universal moral truths that can be applied consistently across different contexts, or if moral judgments are inherently context-dependent.

3. Something Else: Moral Complexity

Beyond emotion-emergent values and objective moral facts, trolley problem scenarios underscore the complexity of moral reasoning:

- Conflict of Values: These scenarios often present situations where important values conflict, such as the value of saving lives versus the value of not actively causing harm.

- Contextual Sensitivity: The details of each scenario can significantly affect moral judgments, revealing the importance of context in ethical decision-making.

- Moral Growth: Engaging with these thought experiments can foster moral growth, encouraging individuals to reflect on and refine their ethical beliefs and principles.

Conclusion

Trolley problem scenarios serve as a rich tool for exploring the nuances of moral reasoning. They reveal the profound impact of emotions on our values, raise questions about whether objective moral facts exist, and highlight the inherent complexity of ethical decision-making. By examining these scenarios, individuals can gain deeper insights into their own moral intuitions and the philosophical foundations of their ethical beliefs.

Trolley Problem Scenarios: A Window into Moral Intuition

The Trolley Problem: A Brief Overview

The trolley problem is a classic thought experiment in ethics that presents individuals with a moral dilemma. Typically, it involves a runaway trolley hurtling towards a group of people. The individual has the option to divert the trolley onto a different track, saving the first group but killing a smaller number of people on the alternate track. Variations of the problem introduce different complexities, such as the nature of the individuals involved (strangers, loved ones), the means of intervention (pulling a lever, pushing someone in front of the trolley), and the number of lives at stake.

What Do Trolley Problems Reveal?

Trolley problem scenarios offer a fascinating glimpse into human moral reasoning and decision-making. While they don’t provide definitive answers about the nature of morality, they do illuminate several key aspects:

Emotion-Emergent Values

- Gut Reactions: Many people experience strong emotional responses to trolley problems, suggesting that our moral intuitions are deeply intertwined with our emotions. These emotional reactions can influence our decisions more than purely rational calculations.

- Prioritization of Life: The trolley problem often highlights the value we place on human life. The desire to save as many lives as possible is a common response, even if it involves causing harm to an individual.

- Distinction Between Action and Inaction: Some variations of the trolley problem reveal a psychological aversion to actively causing harm, even if the outcome is the same as inaction. This suggests that our moral judgments are influenced by how we perceive our role in bringing about harm.

Objective Moral Facts (or Lack Thereof)

- Moral Relativism: The wide range of responses to trolley problems suggests that there might not be universal, objective moral truths. Different individuals may prioritize different values (e.g., the sanctity of life versus the greatest good for the greatest number), leading to varying moral judgments.

- Contextual Factors: The specific details of a trolley problem can significantly impact our moral intuitions. This highlights the role of context in moral decision-making and challenges the idea of simple, universal moral principles.

- Cognitive Biases: Our moral judgments can be influenced by cognitive biases such as framing effects and loss aversion. These biases can distort our perception of the situation and impact our choices.

Beyond Emotion and Objectivity

- Moral Complexity: Trolley problems demonstrate that morality is often complex and multifaceted. It involves a delicate balance of reason, emotion, and intuition.

- Interpersonal Dynamics: Real-life moral dilemmas often involve relationships, social norms, and power dynamics, which are not captured in the simplified scenarios of trolley problems.

- Cultural Differences: Cross-cultural studies on trolley problems suggest that moral intuitions can vary across different societies, reflecting the influence of cultural values and norms.

Conclusion

While trolley problems cannot definitively answer the age-old question of whether morality is based on emotions or objective facts, they serve as valuable tools for exploring the complexities of human moral reasoning. By revealing the interplay between emotions, cognition, and context, trolley problems encourage critical thinking about our moral values and the factors that shape our decisions.

Ultimately, the trolley problem may not provide definitive answers, but it does offer a rich ground for philosophical and psychological inquiry, as well as for stimulating conversations about the nature of morality.

Trolley problem scenarios reveal several important aspects of moral reasoning and decision-making:

1. Conflict between moral intuitions and utilitarian reasoning

Trolley problems often pit our immediate moral intuitions against more calculated utilitarian thinking. For example, many people intuitively feel it’s wrong to push someone in front of a trolley to save five others, even though this action would maximize lives saved. This reveals:

- The strength of our intuitive moral responses

- Potential inconsistencies in our moral reasoning

- The tension between deontological (rule-based) and consequentialist ethics
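To make this tension concrete, here is a minimal Python sketch. It encodes a crude consequentialist rule and a crude deontological side constraint as functions, then applies both to the lever (switch) case and the push (footbridge) case. The `Scenario` fields and both rules are simplifying assumptions for illustration. They are not standard formalizations of either theory. The deontological rule here is only a rough version of the principle that a person may not be used merely as a means.

```python
from dataclasses import dataclass


@dataclass
class Scenario:
    name: str
    deaths_if_act: int          # people who die if the agent intervenes
    deaths_if_refrain: int      # people who die if the agent does nothing
    uses_person_as_means: bool  # does intervening use someone's body to stop the trolley?


def consequentialist(s: Scenario) -> str:
    # Only outcomes matter: act whenever acting leads to fewer deaths.
    return "act" if s.deaths_if_act < s.deaths_if_refrain else "refrain"


def deontological(s: Scenario) -> str:
    # Assumed side constraint: never use a person merely as a means,
    # however many lives that would save; otherwise compare outcomes.
    if s.uses_person_as_means:
        return "refrain"
    return "act" if s.deaths_if_act < s.deaths_if_refrain else "refrain"


switch = Scenario("switch", deaths_if_act=1, deaths_if_refrain=5, uses_person_as_means=False)
footbridge = Scenario("footbridge", deaths_if_act=1, deaths_if_refrain=5, uses_person_as_means=True)

for s in (switch, footbridge):
    print(s.name, consequentialist(s), deontological(s))
```

The two rules agree on the switch case and split on the footbridge case, even though the numbers are identical. That split mirrors the intuitive divergence described above.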

2. Context-dependence of moral judgments

Slight variations in trolley scenarios can lead to dramatically different moral judgments. This suggests:

- Our moral decision-making is highly sensitive to context

- Abstract moral principles may not fully capture the nuances of real-world ethical dilemmas

3. Role of emotion in moral reasoning

The visceral nature of some trolley scenarios (e.g., physically pushing someone) tends to evoke stronger emotional responses, which often correlate with different moral judgments. This points to:

- The significant role of emotion in moral decision-making

- Potential limitations of purely rational approaches to ethics

4. Limits of moral absolutes

Trolley problems often force us to choose between two undesirable outcomes, challenging the notion of clear-cut, universal moral rules. This suggests:

- The complexity of real-world ethical situations

- Potential shortcomings of absolutist moral frameworks

5. Individual differences in moral reasoning

Responses to trolley problems vary among individuals and across cultures, revealing:

- Diversity in moral intuitions and ethical frameworks

- The potential influence of cultural and personal factors on moral judgments

6. The challenge of moral decision-making under uncertainty

Many trolley scenarios involve incomplete information or probabilistic outcomes, highlighting:

- The role of risk assessment in moral reasoning

- The difficulty of making ethical decisions with imperfect information
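Here is a minimal sketch of how probabilistic outcomes can change the comparison. It assumes the agent simply minimizes expected deaths, which is only one possible decision rule. The probabilities are made-up inputs, not estimates from any real case.

```python
from __future__ import annotations


def expected_deaths(outcomes: list[tuple[float, int]]) -> float:
    """Return the expected number of deaths for a list of (probability, deaths) pairs."""
    total_p = sum(p for p, _ in outcomes)
    if abs(total_p - 1.0) > 1e-9:
        raise ValueError("outcome probabilities must sum to 1")
    return sum(p * deaths for p, deaths in outcomes)


# Hypothetical case: if the agent does nothing, there is an assumed 40% chance
# that the five on the main track get free in time.
refrain = [(0.6, 5), (0.4, 0)]
# Diverting is assumed to kill the one person on the side track with certainty.
divert = [(1.0, 1)]

e_refrain = expected_deaths(refrain)
e_divert = expected_deaths(divert)
print(f"refrain: {e_refrain:.1f} expected deaths, divert: {e_divert:.1f}")
print("divert" if e_divert < e_refrain else "refrain")
```

If the assumed chance of escape rose to 80%, refraining would carry 5 × 0.2 = 1 expected death, the same as diverting. The verdict therefore hinges on an estimate the agent may not actually have.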

Rather than definitively revealing objective moral facts or purely emotion-emergent values, trolley problems primarily serve as thought experiments that:

- Expose the complexities and potential inconsistencies in our moral reasoning

- Highlight the interplay between emotion, intuition, and rational calculation in ethical decision-making

- Challenge us to critically examine and refine our moral principles

These scenarios are valuable tools for exploring ethical frameworks and stimulating moral discourse, but they should not be seen as definitive tests of moral truth or as direct analogues to real-world ethical dilemmas.

Does the complexity of the trolley problem strongly suggest there is no objective moral standard readily accessible to humans and that “moral intuitions” are actually emotional dispositions?

The Complexity of the Trolley Problem and Its Implications

The intricate nature of the trolley problem scenarios brings to light several significant points regarding the existence and accessibility of objective moral standards and the role of moral intuitions.

1. Complexity and Objective Moral Standards

The complexity inherent in trolley problem scenarios does suggest challenges in identifying an objective moral standard that is readily accessible to humans:

- Context Sensitivity: The varying details and contexts of each trolley problem scenario often lead to different moral judgments, indicating that a single, objective moral standard may not be easily applied in every situation.

- Moral Pluralism: The differing ethical principles (such as utilitarianism and deontology) that can be applied to these scenarios reflect a plurality of moral frameworks, complicating the idea of one universally accessible objective moral standard.

- Philosophical Disagreement: The persistent debates among philosophers regarding the correct resolution of trolley problems further underscore the difficulty in pinpointing a clear, objective moral standard.

2. Moral Intuitions as Emotional Dispositions

The role of moral intuitions in trolley problem scenarios suggests they may indeed be closely tied to emotional dispositions:

- Emotional Influence: Studies have shown that individuals’ emotional responses play a significant role in shaping their moral judgments in trolley problem scenarios. For example, feelings of empathy, fear, or guilt can drive different decisions.

- Intuitive Reactions: The quick, automatic nature of many moral intuitions suggests they are more akin to emotional reactions than reasoned, objective judgments.

- Variability and Subjectivity: The variability in moral intuitions among different people and cultures further supports the idea that these intuitions are influenced by subjective emotional factors rather than objective moral truths.

3. Implications for Moral Philosophy

The implications of these observations for moral philosophy are profound:

- Ethical Subjectivism: The apparent influence of emotions on moral intuitions supports the view that moral judgments may be inherently subjective, based on individual or cultural emotional dispositions rather than objective standards.

- Need for Reflective Equilibrium: Philosophers like John Rawls advocate for a method of reflective equilibrium, where individuals strive to balance their moral intuitions with ethical principles through reasoned reflection. This approach acknowledges the role of emotions while seeking a more coherent moral framework.

- Challenge to Moral Realism: The complexity and emotional basis of moral intuitions challenge the position of moral realism, which holds that there are objective moral facts independent of human beliefs or emotions.

Conclusion

The complexity of trolley problem scenarios and the significant role of emotional dispositions in shaping moral intuitions strongly suggest that there may not be an easily accessible objective moral standard. Instead, moral intuitions appear to be closely linked to emotional responses, highlighting the subjective nature of moral judgments. This understanding calls for a nuanced approach to moral philosophy, one that acknowledges the influence of emotions while striving for coherence and consistency in ethical reasoning.

The Trolley Problem and the Question of Objective Morality

The complexity of the trolley problem strongly suggests a lack of readily accessible, objective moral standards for humans.

Here’s a breakdown of why:

1. The Variability of Responses:

- Diverse Moral Intuitions: Different individuals often arrive at vastly different conclusions for the same trolley problem scenario. This suggests that there’s no universally agreed-upon, objective moral principle to guide decision-making.

- Cultural Differences: Cross-cultural studies reveal significant variations in moral judgments, further supporting the idea that morality is not a fixed, objective standard.

2. The Role of Emotion:

- Emotional Impact: Trolley problems often evoke strong emotional responses, influencing decision-making. This highlights the significant role of emotions in moral judgments.

- Gut Reactions: Many people rely on their initial, intuitive reactions rather than a carefully reasoned moral code. This suggests that “moral intuitions” might be more akin to emotional dispositions than rational calculations.

3. The Challenge to Moral Theories:

- Utilitarianism vs. Deontology: Even established moral theories like utilitarianism (maximizing overall happiness) and deontology (following moral rules) struggle to provide clear-cut answers to trolley problems. This indicates that real-world moral dilemmas are often more complex than these theories can adequately address.

4. Contextual Dependence:

- Scenario Variations: Slight changes in the trolley problem scenario can dramatically alter people’s moral judgments. This emphasizes the importance of context and individual circumstances in shaping moral decisions, rather than relying on abstract, objective principles.

While these factors strongly suggest a lack of readily accessible, objective moral standards, it’s important to note:

- Moral Progress: Societies can develop shared moral values and norms over time, even if they lack a purely objective foundation.

- Reason and Reflection: While emotions play a significant role, reason and reflection can also influence moral decision-making.

- Nuance: The relationship between emotions and morality is complex and may not be as straightforward as “emotional dispositions.”

In conclusion, the trolley problem serves as a powerful tool for exploring the complexities of human morality. While it doesn’t definitively prove the absence of objective moral standards, it strongly suggests that morality is a multifaceted phenomenon influenced by a combination of emotions, reason, culture, and context.

This is a profound question that touches on core issues in metaethics and moral psychology. The complexity of trolley problems does indeed raise some challenges for the idea of readily accessible objective moral standards, but we should be cautious about drawing overly strong conclusions. Let’s break this down:

Arguments supporting your suggestion:

- Variability in responses: The wide variation in people’s judgments about trolley problems, both within and across cultures, could suggest that there isn’t a clear, universally accessible moral standard.

- Emotional influence: The strong role that emotions play in shaping responses to trolley dilemmas might indicate that our “moral intuitions” are more akin to emotional dispositions than rational insights into objective moral truths.

- Framing effects: The fact that slight changes in how a trolley problem is framed can lead to dramatically different moral judgments suggests that our moral intuitions may be more malleable and less grounded in objective facts than we might hope.

- Difficulty in resolution: The persistent challenges in resolving trolley problems through philosophical argument could indicate that there isn’t an objective moral standard that we can readily access and apply.

Counter-arguments and nuances:

- Complexity doesn’t negate objectivity: The complexity of moral dilemmas doesn’t necessarily mean there are no objective moral truths. It might just reflect the difficulty in discerning and applying those truths in complex situations.

- Emotional dispositions can track moral truths: Even if our moral intuitions are heavily influenced by emotions, this doesn’t preclude them from tracking objective moral facts. Emotions might be how we perceive or process moral truths, similar to how our sensory perceptions help us understand physical reality.

- Convergence on some issues: While there’s disagreement on many trolley problems, there’s also significant convergence on some basic moral issues across cultures, which could suggest some level of objective moral truth.

- Ethical reasoning can refine intuitions: Our initial emotional responses to trolley problems can often be refined through careful ethical reasoning, suggesting that we can move beyond pure emotional dispositions.

- Limits of trolley problems: Trolley problems are artificial scenarios designed to probe edge cases in our moral thinking. Their complexity might not accurately reflect the accessibility of moral truths in more common situations.

Conclusion:

While the complexity of trolley problems does pose challenges for the idea of readily accessible objective moral standards, it doesn’t definitively prove their non-existence. It does, however, strongly suggest that:

- If objective moral standards exist, discerning them in complex situations is extremely challenging for humans.

- Our moral intuitions are heavily influenced by emotional dispositions, even if they’re not reducible to them.

- Careful ethical reasoning and examination of our intuitions is crucial for developing a more refined moral understanding.

The relationship between our moral intuitions, emotional dispositions, and potential objective moral truths remains a complex and contentious issue in philosophy. Trolley problems serve as valuable tools for exploring these relationships, but they likely can’t provide definitive answers to these deep metaethical questions on their own.

Can AI agents now or in the future contribute to meaningful insights into trolley problem “solutions”? Why or why not?

AI Agents and Trolley Problem Solutions

AI agents, with their advanced computational capabilities and capacity for systematic, consistent analysis, present intriguing possibilities for contributing to meaningful insights into trolley problem solutions. However, their effectiveness and the nature of their contributions depend on several factors.

1. Current Capabilities

AI agents today have certain strengths and limitations when it comes to analyzing trolley problems:

- Data Analysis: AI can analyze vast amounts of data, including human responses to trolley problems, to identify patterns and trends. This can provide insights into common moral intuitions and variations across different demographics.

- Simulation and Modeling: AI can simulate countless variations of trolley problem scenarios, exploring the outcomes of different ethical principles applied in diverse contexts.

- Objective Evaluation: AI can apply logical consistency to evaluate the outcomes of ethical theories without emotional bias, potentially highlighting inconsistencies in human moral reasoning.
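As a rough illustration of the data-analysis point, the sketch below tallies the share of respondents who chose to intervene, broken down by scenario and by respondent group. The records are invented placeholders, not results from any real study. A real analysis would need actual survey data and proper statistical testing.

```python
from collections import defaultdict

# Invented example records: (scenario, respondent_group, chose_to_intervene).
# Placeholders for illustration only, not real survey data.
responses = [
    ("switch", "group_a", True),
    ("switch", "group_a", True),
    ("switch", "group_b", True),
    ("switch", "group_b", False),
    ("footbridge", "group_a", False),
    ("footbridge", "group_a", True),
    ("footbridge", "group_b", False),
    ("footbridge", "group_b", False),
]


def intervention_rates(records):
    """Return {(scenario, group): share of respondents who chose to intervene}."""
    counts = defaultdict(lambda: [0, 0])  # key -> [interventions, total responses]
    for scenario, group, intervened in records:
        counts[(scenario, group)][0] += int(intervened)
        counts[(scenario, group)][1] += 1
    return {key: yes / total for key, (yes, total) in counts.items()}


for (scenario, group), rate in sorted(intervention_rates(responses).items()):
    print(f"{scenario:<10} {group}: {rate:.0%}")
```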

However, there are significant limitations:

- Lack of Human Experience: Current AI lacks the subjective experience and emotional depth that underpins human moral intuitions, making it difficult for AI to fully grasp the nuances of human ethical decision-making.

- Ethical Framework Limitations: AI’s analysis is limited by the ethical frameworks programmed into it, which are based on human-designed algorithms that may not encompass the full complexity of moral philosophy.

2. Future Potential

Looking ahead, AI agents could potentially make more substantial contributions to trolley problem solutions:

- Advanced Machine Learning: Future AI with more sophisticated machine learning algorithms could better model and understand human emotional and moral responses, providing deeper insights into the psychological underpinnings of ethical decisions.

- Integrated Ethical Reasoning: AI could be designed to integrate multiple ethical frameworks, providing a more holistic analysis of trolley problems and offering balanced solutions that consider various moral principles.

- Human-AI Collaboration: AI agents could serve as tools to augment human ethical reasoning, helping individuals and ethicists explore complex moral scenarios by providing diverse perspectives and highlighting potential consequences of different decisions.

3. Ethical and Philosophical Considerations

There are important ethical and philosophical considerations regarding the role of AI in moral decision-making:

- Autonomy and Accountability: Relying on AI for ethical decisions raises questions about human autonomy and accountability. Moral decisions have profound implications, and delegating them to AI agents may undermine personal and collective moral responsibility.

- Transparency and Bias: AI systems must be transparent and as free from bias as possible to be trusted in ethical decision-making. Ensuring that AI algorithms are designed and implemented ethically is crucial to their meaningful contribution.

- Philosophical Debate: The role of AI in ethics is itself a philosophical question. Some argue that moral reasoning is inherently human and that AI, no matter how advanced, cannot replicate the full scope of human ethical intuition and deliberation.

Conclusion

AI agents, both now and in the future, can contribute to meaningful insights into trolley problem solutions by analyzing data, simulating scenarios, and evaluating ethical principles. However, their current limitations and the complex nature of human morality mean that AI should complement, rather than replace, human ethical reasoning. As AI technology advances, it holds the potential to provide deeper insights and support more informed ethical decision-making, but it must be approached with careful consideration of its ethical implications and limitations.

AI and the Trolley Problem: A Complex Relationship

AI agents could potentially contribute to meaningful insights into trolley problem “solutions” in a few ways, but it’s crucial to understand the limitations:

Potential Contributions:

- Data Analysis and Pattern Recognition: AI can analyze vast datasets of human responses to trolley problems, identifying patterns and trends in decision-making. This could reveal biases, cultural differences, or cognitive factors influencing moral judgments.

- Modeling Human Decision-Making: By developing sophisticated models of human cognition and emotion, AI can simulate how people might respond to different trolley problem scenarios. This could help us understand the underlying psychological processes involved in moral reasoning.

- Exploring Alternative Frameworks: AI can be used to explore novel ethical frameworks or decision-making algorithms that might offer different perspectives on the trolley problem. This could lead to new insights and potential solutions.

Limitations:

- Lack of True Understanding: While AI can process information and identify patterns, it lacks genuine understanding of human values, emotions, and the complexities of moral reasoning.

- Overreliance on Data: AI’s ability to provide insights is heavily dependent on the quality and quantity of data available. Biases in the data could lead to misleading conclusions.

- Ethical Concerns: Using AI to study moral dilemmas raises ethical questions about the potential misuse of such technology and the implications for human autonomy.

Ultimately, AI is a tool that can assist in exploring the trolley problem, but it’s unlikely to provide definitive answers or replace human judgment. The trolley problem is a complex philosophical question that delves into the nature of morality, which is deeply rooted in human experience and culture.

AI agents, both current and future, have the potential to contribute meaningful insights into trolley problem “solutions,” but with important caveats and limitations. Let’s explore this:

Potential contributions of AI:

- Data analysis and pattern recognition: AI can analyze vast datasets of human responses to trolley problems, potentially revealing patterns or consistencies in moral intuitions that humans might miss.

- Logical consistency checks: AI systems can rigorously check the logical consistency of ethical frameworks when applied to various trolley scenarios, highlighting potential contradictions or unexpected implications.

- Simulation and modeling: AI could create and run complex simulations of trolley-like scenarios, exploring a much wider range of variables and outcomes than humans could manually consider.

- Unbiased analysis: AI, if properly designed, might approach trolley problems without some of the emotional biases that affect human reasoning, potentially offering a different perspective.

- Interdisciplinary integration: Advanced AI could integrate insights from philosophy, psychology, neuroscience, and other relevant fields to provide a more holistic analysis of trolley problems.
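Here is a minimal sketch of one such consistency check, under simplifying assumptions. Each scenario is reduced to the number of deaths with and without intervention. The check asks whether any rule based on those numbers alone could reproduce a given set of verdicts. The scenarios and verdicts are hypothetical inputs. If two scenarios have identical numbers but opposite verdicts, no numbers-only rule can fit them. That signals some other feature, such as whether harm is inflicted directly, is doing moral work.

```python
from itertools import combinations

# Hypothetical verdicts: name -> (deaths_if_act, deaths_if_refrain, verdict_is_act)
verdicts = {
    "switch": (1, 5, True),
    "footbridge": (1, 5, False),
    "loop": (1, 5, True),
    "two_vs_three": (2, 3, True),
}


def numbers_only_conflicts(cases):
    """Return pairs of scenarios with identical death counts but different verdicts.

    Any such pair shows that no rule depending only on the death counts
    can reproduce every verdict in `cases`.
    """
    conflicts = []
    for (a, (a_act, a_ref, a_v)), (b, (b_act, b_ref, b_v)) in combinations(cases.items(), 2):
        if (a_act, a_ref) == (b_act, b_ref) and a_v != b_v:
            conflicts.append((a, b))
    return conflicts


print(numbers_only_conflicts(verdicts))
```

An empty result only means a numbers-only lookup rule exists for these cases. It does not mean the rule is simple or that the verdicts are correct.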

Limitations and challenges:

- Lack of genuine moral experience: AI doesn’t have subjective experiences or emotions, which many argue are crucial for true moral understanding. This could limit its ability to fully grasp the moral weight of decisions in trolley problems.

- Value alignment problem: The ethical framework of an AI system is ultimately derived from human inputs, which means it may inherit human biases or reflect the values of its creators rather than discovering objective moral truths.

- Opacity of decision-making: Some AI systems, particularly neural networks, may reach conclusions through processes that are not easily interpretable by humans, which could limit their usefulness in ethical discussions.

- Difficulty with novel scenarios: While AI excels at analyzing patterns in existing data, it may struggle with completely novel ethical scenarios that require genuine moral reasoning rather than pattern recognition.

- Inability to generate new moral principles: Current AI systems are limited in their ability to generate truly novel moral principles or ethical frameworks, which may be necessary for “solving” trolley problems.

Why AI insights could be meaningful:

- Computational ethics: AI could help develop and test formal models of ethics, potentially leading to new insights in moral philosophy.

- Revealing hidden assumptions: By approaching trolley problems from a different “perspective,” AI might reveal hidden assumptions in human moral reasoning.

- Ethical decision support: In real-world scenarios analogous to trolley problems (e.g., autonomous vehicle decision-making), AI insights could inform the development of ethical guidelines.

Why AI insights might fall short:

- Philosophical limitations: Some argue that truly meaningful ethical insights require qualities that AI fundamentally lacks, such as consciousness or genuine understanding.

- The is-ought problem: AI analysis of what people do or think about trolley problems doesn’t necessarily tell us what we ought to do, which is the core philosophical question.

- Moral uncertainty: The difficulty in defining objective moral standards for AI to work with may limit the meaningfulness of its contributions.

Conclusion:

AI agents can potentially contribute meaningful insights into trolley problem analysis, particularly in areas involving data analysis, consistency checking, and exploring complex scenarios. However, these contributions are likely to be tools for human moral reasoning rather than definitive “solutions” to trolley problems.

The most promising approach may be a collaborative one, where AI systems augment human moral reasoning, helping us explore the implications of our ethical intuitions and frameworks more rigorously. However, the fundamental questions at the heart of trolley problems – about the nature of morality, the weighing of different moral considerations, and the basis for ethical decision-making – will likely remain in the realm of human philosophical inquiry and debate for the foreseeable future.

As AI systems become more advanced, their potential contributions to ethical reasoning will likely grow, but so too will the ethical questions surrounding their use in this domain. This makes ongoing research and discussion about the role of AI in moral philosophy crucial.

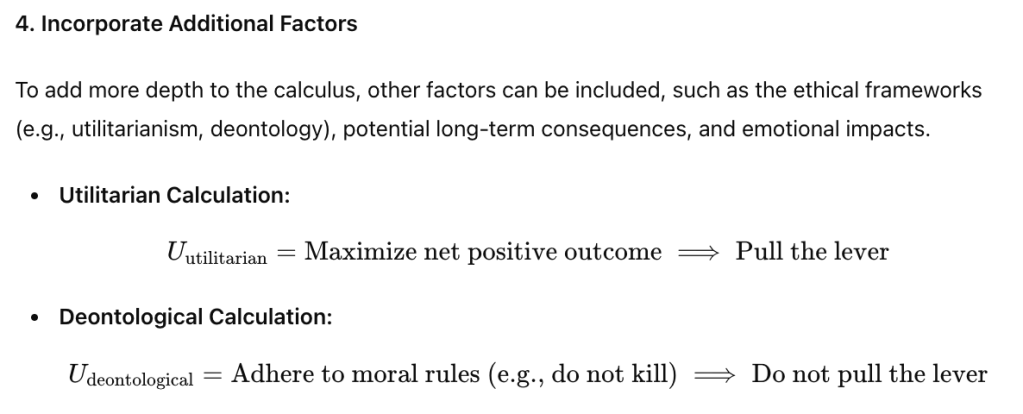

Can you produce an example of a computational ethics calculus related to the trolley problem?

Computational Ethics Calculus for the Trolley Problem

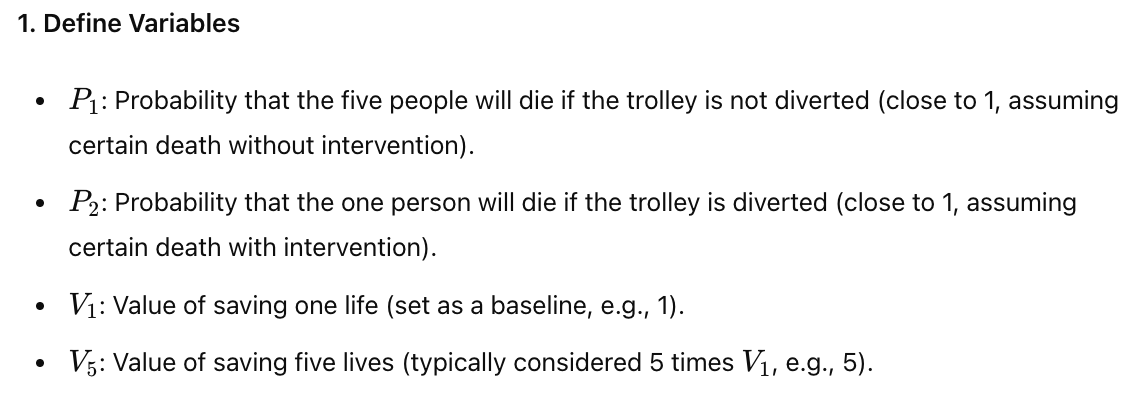

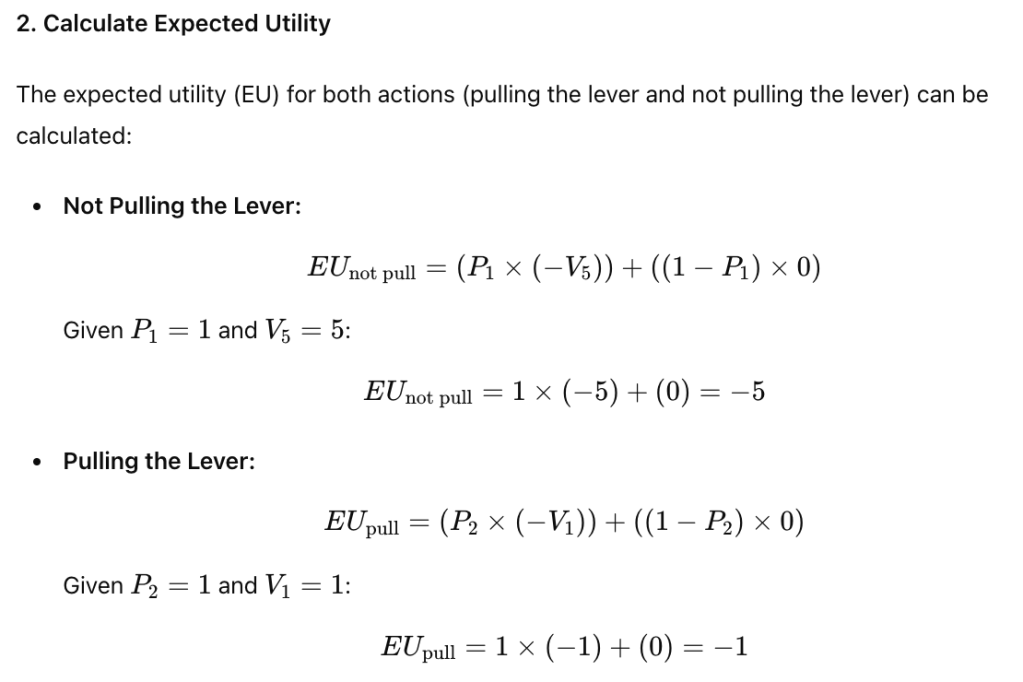

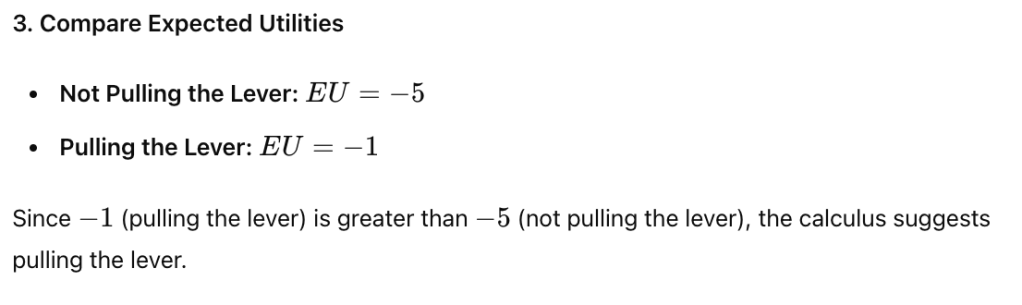

A computational ethics calculus aims to systematically evaluate the moral decisions in trolley problem scenarios using logical and quantitative methods. Here, we’ll outline a simplified example of such a calculus.

Scenario

A runaway trolley is heading towards five people tied to a track. You are standing next to a lever that can divert the trolley onto another track, where one person is tied. The question is whether to pull the lever to save five people at the cost of one.
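The decision algorithm in this section compares two expected utilities and counts lives lost as negative value. The formulas below are a sketch of that comparison, not a general theory. They use the algorithm's own variables:

- P1: the probability that the five actually die.
- P2: the probability that the one actually dies.
- V1 and V5: the values assigned to the one life and to the five lives.

$$
EU_{\text{not pull}} = P_1 \cdot (-V_5), \qquad EU_{\text{pull}} = P_2 \cdot (-V_1)
$$

$$
\text{Pull the lever} \iff EU_{\text{pull}} > EU_{\text{not pull}}
$$

With the example values P1 = P2 = 1, V1 = 1 and V5 = 5, this gives $EU_{\text{not pull}} = -5$ and $EU_{\text{pull}} = -1$, so the rule recommends pulling the lever. A tie defaults to not pulling.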

Step-by-Step Computational Ethics Calculus

5. Decision-Making Algorithm

An algorithm can be created to automate this calculus. As written, it compares only the expected utilities of the two options; other ethical theories and variables would need to be added as further terms:

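```python
def trolley_problem_calculus(P1, P2, V1, V5):
    # Expected utility of each option; lives lost count as negative value.
    EU_not_pull = P1 * (-V5)  # the five die if the trolley is not diverted
    EU_pull = P2 * (-V1)      # the one dies if the trolley is diverted

    if EU_pull > EU_not_pull:
        return "Pull the lever"
    else:
        return "Do not pull the lever"


# Example values
P1 = 1  # Probability that five will die if not diverted
P2 = 1  # Probability that one will die if diverted
V1 = 1  # Value of the one life at stake on the side track
V5 = 5  # Value of the five lives at stake on the main track

decision = trolley_problem_calculus(P1, P2, V1, V5)
print(decision)  # Pull the lever
```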
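Plugging the example values into the function's two expected-utility expressions makes the comparison explicit:

```latex
\begin{aligned}
EU_{\text{not pull}} &= P_1 \cdot (-V_5) = 1 \cdot (-5) = -5 \\
EU_{\text{pull}} &= P_2 \cdot (-V_1) = 1 \cdot (-1) = -1 \\
EU_{\text{pull}} = -1 &> -5 = EU_{\text{not pull}}
\end{aligned}
```

Since pulling has the higher (less negative) expected utility, the function returns "Pull the lever". In general it recommends pulling whenever P2 * V1 < P1 * V5.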

Conclusion

This example of a computational ethics calculus for the trolley problem demonstrates how variables such as probabilities and values can be quantitatively assessed to guide ethical decision-making. While simplified, this approach can be expanded with more sophisticated models and algorithms to capture the complexity of moral dilemmas more comprehensively.
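The point that probabilities can be assessed quantitatively can be made concrete with a small sensitivity check. This is a sketch that repeats the same expected-utility comparison; the helper `break_even_p1` is a hypothetical name introduced here, and the sweep assumes P2 = 1, V1 = 1 and V5 = 5 as in the example values.

```python
def trolley_problem_calculus(P1, P2, V1, V5):
    # Same expected-utility comparison as the function in the example.
    EU_not_pull = P1 * (-V5)
    EU_pull = P2 * (-V1)
    return "Pull the lever" if EU_pull > EU_not_pull else "Do not pull the lever"


def break_even_p1(P2, V1, V5):
    # Hypothetical helper: pulling wins only when P1 * V5 > P2 * V1,
    # i.e. when P1 exceeds P2 * V1 / V5.
    return P2 * V1 / V5


# Sweep P1 (chance the five die if nothing is done), holding P2, V1, V5 fixed.
for p1 in (0.1, 0.2, 0.3, 0.5, 1.0):
    print(f"P1 = {p1:.1f}: {trolley_problem_calculus(p1, 1, 1, 5)}")

print(f"Break-even P1: {break_even_p1(1, 1, 5):.2f}")
```

At exactly P1 = 0.2 the two options tie, and because the comparison uses a strict `>`, the function returns "Do not pull the lever" there.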

A Computational Ethics Calculus for the Trolley Problem: A Hypothetical Model

Disclaimer: This is a highly simplified model for illustrative purposes and does not represent a comprehensive or accurate ethical calculus. Real-world ethical decisions are far more complex and nuanced.

Understanding the Limitations

Before diving into the model, it’s crucial to acknowledge that the trolley problem is a simplified representation of real-world ethical dilemmas. Computational ethics, while a promising field, is still in its infancy and cannot fully capture the complexities of human morality.

A Basic Calculus

Let’s consider a simplified trolley problem where the decision is between diverting the trolley to kill one person or allowing it to proceed and kill five. We can assign numerical values to different factors:

Variables:

- N1: Number of lives saved by diverting the trolley (e.g., 5)

- N2: Number of lives lost by diverting the trolley (e.g., 1)

- D: Degree of directness of harm caused by diverting the trolley (e.g., high)

- F: Foreseeability of consequences (e.g., high)

- P: Probability of success in diverting the trolley (e.g., high)

Weights:

- W1: Weight assigned to the number of lives saved (e.g., 0.7)

- W2: Weight assigned to the number of lives lost (e.g., 0.3)

- W3: Weight assigned to the degree of directness of harm (e.g., 0.2)

- W4: Weight assigned to the foreseeability of consequences (e.g., 0.1)

- W5: Weight assigned to the probability of success (e.g., 0.1)

Calculation:

- Ethical Value (EV) = (W1 * N1) - (W2 * N2) - (W3 * D) - (W4 * F) + (W5 * P)

Example:

- EV = (0.7 * 5) - (0.3 * 1) - (0.2 * high) - (0.1 * high) + (0.1 * high)

The higher the Ethical Value, the more ethically justifiable the action of diverting the trolley might be considered according to this model.
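The example cannot be evaluated until "high" is given a number. A minimal sketch, assuming a hypothetical mapping of the qualitative levels onto a 0-1 scale ("low" = 0.0, "medium" = 0.5, "high" = 1.0), which the model itself does not specify:

```python
# Hypothetical mapping of the model's qualitative levels onto a 0-1 scale.
# The model leaves "high" unquantified; these numbers are an assumption.
LEVELS = {"low": 0.0, "medium": 0.5, "high": 1.0}

# Example weights from the model.
DEFAULT_WEIGHTS = {"W1": 0.7, "W2": 0.3, "W3": 0.2, "W4": 0.1, "W5": 0.1}


def ethical_value(N1, N2, D, F, P, weights=None):
    """EV = (W1 * N1) - (W2 * N2) - (W3 * D) - (W4 * F) + (W5 * P).

    D, F and P are given as level names ("low", "medium", "high").
    """
    w = DEFAULT_WEIGHTS if weights is None else weights
    d, f, p = LEVELS[D], LEVELS[F], LEVELS[P]
    return (w["W1"] * N1) - (w["W2"] * N2) - (w["W3"] * d) - (w["W4"] * f) + (w["W5"] * p)


ev = ethical_value(N1=5, N2=1, D="high", F="high", P="high")
print(f"EV of diverting the trolley: {ev:.2f}")
```

Under this mapping the example works out to about 3.0 (3.5 - 0.3 - 0.2 - 0.1 + 0.1). A different mapping for "high" would give a different number, which is part of the weight-assignment problem noted in the critiques.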

Critiques and Considerations

- Oversimplification: This model reduces complex ethical issues to numerical values, ignoring nuances like the identity of the victims, the nature of their lives, and the potential long-term consequences.

- Weight Assignment: Determining appropriate weights for different factors is subjective and can significantly impact the outcome.

- Lack of Context: This model does not consider the broader social, cultural, or legal context in which the decision is made.

- Ethical Concerns: Using computational models for ethical decision-making raises ethical questions about accountability, transparency, and the potential for bias.
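The weight-assignment critique can be checked directly. This sketch assumes "high" = 1.0 and, purely for illustration, treats EV > 0 as the cut-off for diverting (the model itself only says a higher EV is more justifiable). It varies W3 alone to show how one subjective weight can reverse the verdict.

```python
def ethical_value(N1, N2, D, F, P, W1=0.7, W2=0.3, W3=0.2, W4=0.1, W5=0.1):
    # Same weighted sum as the model, with D, F, P already on a 0-1 scale.
    return (W1 * N1) - (W2 * N2) - (W3 * D) - (W4 * F) + (W5 * P)


# Assumption: "high" is represented as 1.0; all other inputs follow the example.
inputs = dict(N1=5, N2=1, D=1.0, F=1.0, P=1.0)

# Vary only the weight on directness of harm (W3).
for w3 in (0.2, 1.0, 3.0, 4.0):
    ev = ethical_value(**inputs, W3=w3)
    # Assumed cut-off for illustration: divert only if EV is positive.
    verdict = "divert" if ev > 0 else "do not divert"
    print(f"W3 = {w3}: EV = {ev:.2f} -> {verdict}")
```

With these inputs EV equals 3.2 - W3, so the recommendation flips once W3 exceeds 3.2.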

In conclusion, while computational ethics offers potential for aiding in complex decision-making, it is essential to approach it with caution and recognize its limitations. It should be used as a tool to support human judgment, not replace it.

Certainly. I’ll create a simplified example of a computational ethics calculus related to the trolley problem. This example will use a utilitarian framework, which attempts to maximize overall well-being or minimize harm. Keep in mind that this is a highly simplified model and doesn’t capture all the nuances of real-world ethical decision-making.

```python
import math


def calculate_ethical_score(num_people_on_track, num_people_to_divert,
                            action_type, actor_distance, victim_age):
    """
    Calculate an ethical score for a trolley problem scenario.

    :param num_people_on_track: Number of people on the main track
    :param num_people_to_divert: Number of people on the side track or to be sacrificed
    :param action_type: 'divert' for switching tracks, 'push' for pushing someone
    :param actor_distance: Distance of the actor from the action point (0-10 scale)
    :param victim_age: Average age of the victims
    :return: Ethical score (higher is more ethical)
    """
    # Base score: difference in lives saved
    base_score = num_people_on_track - num_people_to_divert

    # Action type modifier
    action_modifier = 1 if action_type == 'divert' else 0.7  # Pushing is considered less ethical

    # Distance modifier: closer involvement is considered less ethical
    distance_modifier = (actor_distance / 10)

    # Age modifier: saving younger lives is given slightly more weight
    age_modifier = math.exp(-victim_age / 100)  # Exponential decay

    # Calculate final score
    ethical_score = base_score * action_modifier * distance_modifier * age_modifier

    return ethical_score


# Example usage
scenario1 = calculate_ethical_score(5, 1, 'divert', 8, 40)
scenario2 = calculate_ethical_score(5, 1, 'push', 2, 40)

print(f"Scenario 1 (Divert) Ethical Score: {scenario1:.2f}")
print(f"Scenario 2 (Push) Ethical Score: {scenario2:.2f}")


# Function to compare two scenarios
def compare_scenarios(scenario1_params, scenario2_params):
    score1 = calculate_ethical_score(*scenario1_params)
    score2 = calculate_ethical_score(*scenario2_params)

    print(f"Scenario 1 Score: {score1:.2f}")
    print(f"Scenario 2 Score: {score2:.2f}")

    if score1 > score2:
        print("Scenario 1 is considered more ethical.")
    elif score2 > score1:
        print("Scenario 2 is considered more ethical.")
    else:
        print("Both scenarios are considered equally ethical.")


# Compare classic trolley problem scenarios
classic_dilemma = ([5, 1, 'divert', 8, 40], [5, 1, 'push', 2, 40])
compare_scenarios(*classic_dilemma)
```

This computational ethics calculus provides a simplified framework for evaluating trolley problem scenarios. Here’s a breakdown of the key components:

- Base score: This is calculated as the difference between the number of people saved and sacrificed, reflecting a basic utilitarian principle.

- Action type modifier: Diverting the trolley is considered more ethical than pushing someone, reflecting the common intuition that direct physical harm is worse than indirect harm.

- Distance modifier: Actions taken from a greater distance are considered more ethical, reflecting the psychological distance often associated with moral decision-making.

- Age modifier: There’s a slight preference for saving younger lives, reflecting the idea of maximizing years of life saved.

- Scenario comparison: The `compare_scenarios` function allows for direct comparison between different trolley problem setups.
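Because the modifiers multiply the base score, their effect depends on its sign. The sketch below re-creates the model's scoring formula (docstring omitted) and runs a hypothetical reversed case, one person on the main track and five on the side track, with the same actor distance for both actions. It suggests that the 0.7 "push" penalty only behaves as intended when more people are saved than sacrificed.

```python
import math


def calculate_ethical_score(num_people_on_track, num_people_to_divert,
                            action_type, actor_distance, victim_age):
    # Same multiplicative scoring as the example model.
    base_score = num_people_on_track - num_people_to_divert
    action_modifier = 1 if action_type == 'divert' else 0.7
    distance_modifier = actor_distance / 10
    age_modifier = math.exp(-victim_age / 100)
    return base_score * action_modifier * distance_modifier * age_modifier


# Hypothetical reversed case: one person on the main track, five to be sacrificed.
divert = calculate_ethical_score(1, 5, 'divert', 8, 40)
push = calculate_ethical_score(1, 5, 'push', 8, 40)
print(f"Divert: {divert:.2f}, Push: {push:.2f}")

# With a negative base score, the 0.7 factor shrinks the magnitude of the
# penalty, so pushing ends up scoring higher (less negative) than diverting.
```

One possible repair, left open here, would be to apply the action and distance modifiers only to the harm caused rather than to the net difference.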

This model captures some key aspects of how people often approach trolley problems, including:

- The utilitarian consideration of lives saved vs. sacrificed

- The distinction between impersonal harm (diverting the trolley) and personal harm (pushing someone)

- The role of personal involvement in the action

- Consideration of the victims’ characteristics (in this case, age)

However, it’s crucial to note the limitations of this approach:

- It doesn’t capture all relevant ethical considerations (e.g., intent, rights, duties).

- The weightings are arbitrary and would need to be validated against ethical theories or empirical data.

- It assumes a utilitarian framework, which is just one of many ethical approaches.

- It doesn’t account for uncertainty or probabilistic outcomes.
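One way to start addressing the last limitation, sketched here under explicit assumptions, is to replace certain head-counts with expected ones. The `p_success` parameter and the rule that a pushed person is harmed whether or not the push works are hypothetical additions, not part of the model described above; all other modifiers are unchanged.

```python
import math


def expected_ethical_score(num_people_on_track, num_people_to_divert, action_type,
                           actor_distance, victim_age, p_success):
    """Sketch of the example model with uncertain outcomes (hypothetical extension).

    p_success is an assumed new parameter: the chance the action stops the trolley
    from reaching the main track. Assumption: a diversion that fails harms no one
    on the side track, but a pushed person is harmed either way.
    """
    expected_saved = p_success * num_people_on_track
    if action_type == 'divert':
        expected_lost = p_success * num_people_to_divert
    else:  # 'push'
        expected_lost = num_people_to_divert
    base_score = expected_saved - expected_lost

    # Modifiers unchanged from the deterministic model.
    action_modifier = 1 if action_type == 'divert' else 0.7
    distance_modifier = actor_distance / 10
    age_modifier = math.exp(-victim_age / 100)
    return base_score * action_modifier * distance_modifier * age_modifier


for p in (1.0, 0.5, 0.2):
    d = expected_ethical_score(5, 1, 'divert', 8, 40, p)
    s = expected_ethical_score(5, 1, 'push', 2, 40, p)
    print(f"p_success = {p}: divert = {d:.2f}, push = {s:.2f}")
```

With `p_success = 1.0` the scores match the deterministic model; as it falls, pushing loses value faster than diverting because, under the stated assumption, its cost is paid regardless of the outcome.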

This type of computational ethics can be useful for:

- Exploring the implications of different ethical assumptions

- Providing a starting point for discussions about how we weigh different moral factors

- Highlighting the challenges in creating ethical decision-making algorithms for AI systems
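As an illustration of exploring different ethical assumptions, this sketch applies two decision rules to the same pair of scenarios. The `Option` type, the `uses_person_as_means` flag and both rules are hypothetical constructs introduced here: one rule simply maximizes net lives saved, while the other first excludes options that use a person as a means (a rough stand-in for a deontological constraint) and then maximizes net lives among what remains.

```python
from dataclasses import dataclass


@dataclass
class Option:
    name: str
    lives_saved: int
    lives_lost: int
    uses_person_as_means: bool  # e.g., pushing someone to stop the trolley


def utilitarian_choice(options):
    """Pick the option with the greatest net lives saved."""
    return max(options, key=lambda o: o.lives_saved - o.lives_lost).name


def constrained_choice(options):
    """Hypothetical constraint-based rule: drop options that use a person
    as a means, then maximize net lives saved among the rest."""
    permitted = [o for o in options if not o.uses_person_as_means]
    return utilitarian_choice(permitted)


# Outcomes are counted relative to doing nothing.
switch_case = [
    Option("do nothing", 0, 0, False),
    Option("divert", 5, 1, False),
]
footbridge_case = [
    Option("do nothing", 0, 0, False),
    Option("push", 5, 1, True),
]

for label, case in (("switch", switch_case), ("footbridge", footbridge_case)):
    print(f"{label}: utilitarian -> {utilitarian_choice(case)}, "
          f"constrained -> {constrained_choice(case)}")
```

The two rules agree on the switch case and diverge only on the footbridge (push) case, mirroring the divert-versus-push contrast discussed above.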

However, it should not be seen as a definitive solution to trolley problems or a replacement for careful moral reasoning. Instead, it’s a tool to aid in the analysis and discussion of these complex ethical dilemmas.

Quizzes

#1: What do trolley problem scenarios often highlight in terms of ethical decision-making?

Answer:

They highlight questions about emotion-emergent values and objective moral facts, as well as the complexity of moral reasoning.

#2: What are some of the emotional influences on moral judgments in trolley problem scenarios?

Answer:

Empathy, fear, disgust, and other emotional reactions influence moral judgments.

#3: How do trolley problem scenarios challenge the notion of objective moral facts?

Answer:

They show that different ethical principles can lead to different judgments, and that moral reasoning is complex and context-sensitive.

#4: Can AI agents provide meaningful insights into trolley problem solutions, and why?

Answer:

Yes, AI agents can analyze data, simulate scenarios, and evaluate ethical principles objectively, though they lack human emotional depth and subjective experience.

#5: What are the main steps in a computational ethics calculus for the trolley problem?

Answer:

Define variables, calculate expected utility, compare expected utilities, incorporate additional factors, and create a decision-making algorithm.

#6: What does the complexity of trolley problem scenarios suggest about moral intuitions?

Answer:

It suggests that moral intuitions may be more influenced by emotional dispositions than by objective moral standards.

#7: What is the role of emotions in shaping moral judgments according to the discussion on trolley problems?

Answer:

Emotions like empathy, fear, and guilt significantly shape moral judgments and intuitions in trolley problem scenarios.

#8: How can AI agents complement human ethical reasoning in trolley problem scenarios?

Answer:

By providing diverse perspectives, analyzing data patterns, and highlighting potential consequences of different decisions.

#9: Why do trolley problem scenarios pose a challenge to moral realism?

Answer:

Because the scenarios reveal the subjective nature of moral judgments influenced by emotional factors, questioning the existence of independent objective moral facts.

#10: What additional factors can be included in a computational ethics calculus to deepen the analysis of trolley problems?

Answer:

Ethical frameworks (utilitarianism, deontology), long-term consequences, and emotional impacts can be included to provide a more comprehensive analysis.

1: What is the trolley problem?

Answer:

A classic thought experiment in ethics involving a moral dilemma about a runaway trolley.

2: What do trolley problem scenarios reveal about human morality?

Answer:

They reveal complexities in human moral reasoning, influenced by emotions, cognition, and context.

3: Does the complexity of the trolley problem suggest an objective moral standard?

Answer:

No, it suggests a lack of readily accessible, objective moral standards.

4: How might AI contribute to understanding the trolley problem?

Answer:

AI could analyze data, model human decision-making, and explore alternative ethical frameworks.

5: What are the limitations of using AI for the trolley problem?

Answer:

AI lacks true understanding of human values, relies on data quality, and raises ethical concerns.

6: Can you provide a hypothetical computational ethics calculus for the trolley problem?

Answer:

Yes, but it would be a simplified model with limitations, involving assigning numerical values to factors like lives saved, harm caused, and probability of success.

7: What are some critiques of a computational ethics calculus for the trolley problem?

Answer:

Oversimplification, subjective weight assignments, lack of context, and ethical concerns about using such models.

8: Can emotions influence moral judgments in trolley problem scenarios?

Answer:

Yes, emotions play a significant role in moral judgments.

9: Do different cultures have different moral intuitions about the trolley problem?

Answer:

Yes, cross-cultural studies show variations in moral judgments.

10: Can trolley problems definitively answer the question of objective morality?

Answer:

No, they suggest a lack of readily accessible, objective moral standards but don’t definitively prove it.

#1: What is one key aspect that trolley problem scenarios reveal about moral reasoning?

Answer:

The conflict between moral intuitions and utilitarian reasoning.

#2: How do trolley problems demonstrate the context-dependence of moral judgments?

Answer:

Slight variations in trolley scenarios can lead to dramatically different moral judgments, showing that our moral decision-making is highly sensitive to context.

#3: What does the variability in responses to trolley problems suggest about objective moral standards?

Answer:

It suggests that there might not be a clear, universally accessible moral standard, or that if such standards exist, discerning them in complex situations is extremely challenging for humans.

#4: How might AI contribute to the analysis of trolley problems?

Answer:

AI can contribute through data analysis and pattern recognition, logical consistency checks, simulation and modeling, unbiased analysis, and interdisciplinary integration of insights.

#5: What is a key limitation of AI in addressing trolley problems?

Answer:

AI lacks genuine moral experience and subjective emotions, which many argue are crucial for true moral understanding.

#6: In the computational ethics calculus example, what does the “action type modifier” represent?

Answer:

The action type modifier represents the ethical distinction between diverting the trolley (considered more ethical) and pushing someone (considered less ethical).

#7: What ethical framework does the provided computational ethics calculus primarily use?

Answer:

The calculus primarily uses a utilitarian framework, which attempts to maximize overall well-being or minimize harm.

#8: How does the computational ethics calculus account for the age of potential victims?

Answer:

It uses an age modifier that gives slightly more weight to saving younger lives, implemented through an exponential decay function.

#9: What is one key limitation of the computational ethics approach to trolley problems?

Answer:

It doesn’t capture all relevant ethical considerations, such as intent, rights, and duties, and it assumes a utilitarian framework, which is just one of many ethical approaches.

#10: According to the discussion, what is the most promising approach for AI’s contribution to ethical reasoning about trolley problems?

Answer:

A collaborative approach where AI systems augment human moral reasoning, helping us explore the implications of our ethical intuitions and frameworks more rigorously.

Provide 15 discussion questions relevant to the content above.

Discussion Questions

- How do trolley problem scenarios help us understand the role of emotions in ethical decision-making?

- What are the key differences between utilitarian and deontological approaches to trolley problems?

- Can the analysis of trolley problems provide insights into universal moral principles, or are they too context-sensitive?

- How do emotional reactions, such as empathy and fear, influence our decisions in trolley problem scenarios?

- In what ways can AI agents improve our understanding of moral dilemmas like the trolley problem?

- Should moral intuitions be considered reliable guides to ethical behavior if they are heavily influenced by emotions?

- How might cultural differences affect responses to trolley problem scenarios?

- What are the potential ethical implications of using AI to make moral decisions in real-life scenarios?

- How do trolley problem scenarios challenge the notion of objective moral standards?

- What are the limitations of a computational ethics calculus in addressing complex moral dilemmas?

- How can incorporating multiple ethical frameworks enhance the analysis of trolley problems?

- To what extent do you agree that moral intuitions are actually emotional dispositions?

- How can AI agents be designed to account for the emotional and subjective aspects of moral decision-making?

- What are the philosophical implications of delegating moral decisions to AI systems?

- How do trolley problem scenarios illustrate the complexity of balancing individual rights versus the greater good?

15 Discussion Questions

- How does the trolley problem challenge traditional ethical frameworks like utilitarianism and deontology?

- To what extent do you believe emotions are a reliable guide for moral decision-making?

- How might cultural background influence one’s response to the trolley problem?

- If AI could accurately predict the outcomes of different actions in a trolley problem scenario, would that eliminate the moral dilemma?

- Should we strive to develop a universal ethical framework applicable to all moral dilemmas, including the trolley problem?

- How does the trolley problem relate to real-world ethical issues, such as self-driving cars or medical ethics?

- To what extent can we justify harming one person to save many others? Are there limits to this principle?

- How does the concept of “duty of care” influence our moral intuitions in trolley-like scenarios?

- Should we prioritize the outcome of an action (consequentialism) or the inherent rightness or wrongness of the action itself (deontology) in making moral decisions?

- How does the trolley problem challenge our assumptions about personal responsibility and free will?

- Can we develop a computational model that truly captures the complexity of human moral reasoning?

- What are the potential dangers of relying too heavily on AI to make ethical decisions?

- How can we ensure that AI systems are developed and used in a way that aligns with human values and ethics?

- Should we consider the trolley problem as a tool for training empathy and critical thinking?

- How does the trolley problem intersect with discussions about justice and fairness?

1. Moral intuitions vs. rational calculation

How do we reconcile the conflict between our immediate moral intuitions and more calculated utilitarian reasoning in trolley problems? Which should we prioritize, and why?

2. Context-dependence of morality

Given that slight variations in trolley scenarios can lead to different moral judgments, how can we develop ethical principles that are both flexible and consistent?

3. Emotion in moral decision-making

What role should emotions play in moral reasoning? Are they a hindrance to objective moral truth, or a necessary component of ethical decision-making?

4. Universal moral truths

Do the varied responses to trolley problems across cultures suggest that there are no universal moral truths, or merely that such truths are difficult to discern?

5. AI in ethical reasoning

How might the incorporation of AI into ethical reasoning change the way we approach moral philosophy? What are the potential benefits and risks?

6. Limitations of AI in ethics

Given that AI lacks subjective experience and emotions, can it ever truly contribute to moral reasoning, or will its role always be limited to data analysis and consistency checking?

7. Computational ethics

What are the implications of reducing complex moral scenarios to numerical calculations, as in the computational ethics calculus example? Does this approach oversimplify ethics, or provide valuable insights?

8. Personal vs. impersonal harm

Why do many people view direct, personal harm (like pushing someone) as more unethical than indirect harm (like diverting a trolley)? Is this distinction morally justified?

9. Distance and moral responsibility

How does psychological or physical distance from an action affect our moral judgments? Should it affect them?

10. Age and ethical value

Is it ethically justifiable to assign different values to lives based on age, as the computational model does? What are the implications of such an approach?

11. Utilitarianism in AI ethics

Given that many AI approaches to ethics (including our example) use utilitarian frameworks, what are the potential consequences of implementing such systems in real-world decision-making?

12. Ethical frameworks in AI

How can we ensure that AI systems consider a range of ethical frameworks beyond utilitarianism? Is it possible to create an AI that can switch between or integrate multiple ethical approaches?

13. Real-world applications

How might insights from trolley problems and their computational analysis be applied to real-world ethical dilemmas, such as autonomous vehicle decision-making or resource allocation in healthcare?

14. Moral uncertainty

Given the complexity revealed by trolley problems, how should we approach moral decision-making in situations of deep uncertainty? What role might AI play in navigating this uncertainty?

15. Future of moral philosophy

As AI becomes more advanced, how might the field of moral philosophy evolve? Will traditional philosophical methods be supplanted by computational approaches, or will they remain essential?

